
Board Game Prediction using

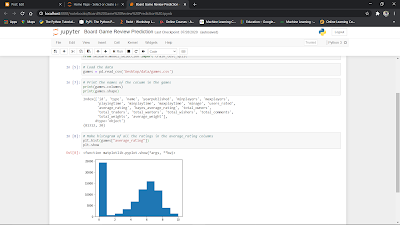

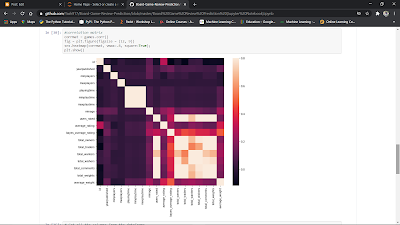

1. Importing Libraries and Loading the Data

After the CSV file 'games.csv' has been copied to the current directory, we can import the data as a pandas DataFrame. A DataFrame makes it easy to explore the type, amount, and distribution of the data. A correlation matrix then shows the relationships between the numeric features, which is an important step in deciding which type of machine learning algorithm to use.

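```python
import sys

import pandas
import matplotlib.pyplot as plt  # the plotting calls below use the plt alias
import seaborn as sns            # the heatmap below uses the sns alias
import sklearn
```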

```python
# Read in the data.
games = pandas.read_csv("games.csv")

# Print the names of the columns in games.
print(games.columns)
print(games.shape)

# Make a histogram of all the ratings in the average_rating column.
plt.hist(games["average_rating"])

# Show the plot.
plt.show()
```
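To see why unrated games stand out in this plot, here is a dependency-free sketch of equal-width binning, which is roughly what plt.hist does with its default of 10 bins. The helper histogram_counts and the ratings list are hypothetical: several unrated games stored as 0 pile into the first bin, far away from the real scores.

```python
def histogram_counts(values, bins=10):
    """Count values into `bins` equal-width bins spanning [min, max].

    Hypothetical helper for illustration; not part of matplotlib.
    """
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        if width == 0:
            idx = 0  # all values identical: everything lands in one bin
        else:
            idx = int((v - lo) / width)
            # The right edge (v == hi) belongs to the last bin, as in matplotlib.
            idx = min(idx, bins - 1)
        counts[idx] += 1
    return counts


# Hypothetical ratings: four unrated games stored as 0, plus six real scores.
ratings = [0, 0, 0, 0, 5.5, 6.0, 6.2, 7.1, 7.8, 8.4]
print(histogram_counts(ratings, bins=10))
```

The first bin holds only the zero ratings, which is the spike the real histogram shows before the cleaning step.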

```python
# Print the first row of all the games with zero scores.
# The .iloc method on dataframes allows us to index by position.
print(games[games["average_rating"] == 0].iloc[0])

# Print the first row of all the games with scores greater than 0.
print(games[games["average_rating"] > 0].iloc[0])
```

```python
# Remove any rows without user reviews.
games = games[games["users_rated"] > 0]

# Remove any rows with missing values.
games = games.dropna(axis=0)
```
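The two cleaning steps can be sketched without pandas, using a list of dicts as a stand-in for the DataFrame. The rows, the playingtime column, and the clean helper are hypothetical, and None stands in for pandas' NaN.

```python
# Hypothetical rows standing in for the games DataFrame.
rows = [
    {"name": "A", "users_rated": 0, "average_rating": 0.0, "playingtime": 30},
    {"name": "B", "users_rated": 120, "average_rating": 7.2, "playingtime": 60},
    {"name": "C", "users_rated": 45, "average_rating": 6.1, "playingtime": None},
    {"name": "D", "users_rated": 8, "average_rating": 5.4, "playingtime": 45},
]


def clean(rows):
    """Hypothetical helper mirroring the two filtering steps above."""
    # Step 1: keep only games with at least one user rating
    # (like games[games["users_rated"] > 0]).
    rated = [r for r in rows if r["users_rated"] > 0]
    # Step 2: drop any row that has a missing value (like dropna(axis=0)).
    return [r for r in rated if all(v is not None for v in r.values())]


print([r["name"] for r in clean(rows)])
```

Game A is dropped for having no ratings and game C for its missing value, leaving B and D.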

```python
# Make a histogram of all the ratings in the average_rating column.
plt.hist(games["average_rating"])

# Show the plot.
plt.show()
```

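```python
# Correlation matrix of the numeric columns. numeric_only=True skips text
# columns such as "name" and "type"; pandas 2.0 and later raise an error otherwise.
corrmat = games.corr(numeric_only=True)

fig = plt.figure(figsize=(12, 9))
sns.heatmap(corrmat, vmax=0.8, square=True)
plt.show()
```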
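Each cell of the heatmap is a Pearson correlation coefficient, the default method of DataFrame.corr. A minimal stdlib sketch of that computation follows. The helper names pearson and corr_matrix and the sample columns are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation: covariance divided by the product of standard deviations."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def corr_matrix(columns):
    """Pairwise correlations for a dict mapping column name -> list of numbers."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical numeric columns.
data = {
    "minplayers": [1, 2, 2, 3, 4],
    "maxplayers": [2, 4, 5, 6, 8],
    "playingtime": [90, 60, 45, 30, 20],
}
for name, row in corr_matrix(data).items():
    print(name, {k: round(v, 2) for k, v in row.items()})
```

Values near +1 or -1 indicate strongly related features; the diagonal is always 1.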

```python
# Get all the columns from the dataframe.
columns = games.columns.tolist()

# Filter the columns to remove ones we don't want: text columns (type, name),
# the identifier (id), the target itself, and a rating derived from it.
columns = [c for c in columns if c not in ["bayes_average_rating", "average_rating", "type", "name", "id"]]

# Store the variable we'll be predicting on.
target = "average_rating"
```

2. Linear Regression

In the following cells, we fit a simple linear regression model to predict the average rating of each board game, using the mean squared error as the performance metric. We then compare these results with the performance of an ensemble method, a random forest.
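For reference, the model and the metric can be written as follows. Here $x_{ij}$ is the value of feature $j$ (one of the $p$ columns kept above) for game $i$, $y_i$ is that game's average_rating, and $n$ is the number of games being scored. LinearRegression chooses the coefficients $\beta$ by ordinary least squares, which minimizes this same squared error on the training set.

$$
\hat{y}_i = \beta_0 + \sum_{j=1}^{p} \beta_j x_{ij},
\qquad
\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2
$$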

```python
# Import a convenience function to split the sets. It lives in
# sklearn.model_selection; sklearn.cross_validation was removed in scikit-learn 0.20.
# (The split below is done manually with sample(), so this import is optional.)
from sklearn.model_selection import train_test_split

# Generate the training set. Set random_state to be able to replicate results.
train = games.sample(frac=0.8, random_state=1)

# Select anything not in the training set and put it in the testing set.
test = games.loc[~games.index.isin(train.index)]

# Print the shapes of both sets.
print(train.shape)
print(test.shape)
```
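The manual split above takes a seeded random 80% sample and uses its complement as the test set. The same idea in plain Python looks like this; split_indices is a hypothetical helper, and because Python's random module differs from pandas' RNG, it will not pick the same rows as games.sample.

```python
import random


def split_indices(indices, frac=0.8, seed=1):
    """Return (train, test): a seeded random sample of indices and its complement."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    n_train = round(frac * len(indices))
    train = rng.sample(indices, n_train)
    train_set = set(train)
    # Everything not sampled goes to the test set, like ~index.isin(train.index).
    test = [i for i in indices if i not in train_set]
    return train, test


train_idx, test_idx = split_indices(list(range(100)))
print(len(train_idx), len(test_idx))
```

The two sets never overlap and together cover every row, which is what the ~games.index.isin(train.index) filter guarantees.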

```python
# Import the linear regression model.
from sklearn.linear_model import LinearRegression

# Initialize the model class.
model = LinearRegression()

# Fit the model to the training data.
model.fit(train[columns], train[target])
```
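LinearRegression solves the least-squares problem for all feature columns at once. With a single feature the solution has a short closed form, sketched below. The helpers fit_simple_ols and predict and the toy data are hypothetical and only illustrate what fit computes.

```python
def fit_simple_ols(xs, ys):
    """Closed-form least-squares fit of y = intercept + slope * x (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    # The fitted line always passes through (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return intercept, slope


def predict(intercept, slope, xs):
    return [intercept + slope * x for x in xs]


# Hypothetical data lying exactly on y = 1 + 2x.
intercept, slope = fit_simple_ols([1, 2, 3, 4], [3, 5, 7, 9])
print(intercept, slope, predict(intercept, slope, [5, 6]))
```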

```python
# Import the scikit-learn function to compute error.
from sklearn.metrics import mean_squared_error

# Generate our predictions for the test set.
predictions = model.predict(test[columns])

# Compute error between the actual values and our test predictions.
# The argument order is (y_true, y_pred); print() shows the value outside a notebook.
print(mean_squared_error(test[target], predictions))
```
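The metric itself is just the average squared difference between actual and predicted ratings. A stdlib version, with the hypothetical name mse and made-up values, is below; its square root (RMSE) puts the error back in rating units.

```python
import math


def mse(y_true, y_pred):
    """Mean of squared differences; the same quantity sklearn's mean_squared_error reports."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical actual and predicted average ratings.
actual = [6.0, 7.5, 5.0]
predicted = [6.5, 7.0, 6.0]

error = mse(actual, predicted)
print(error, math.sqrt(error))
```

Because each difference is squared, swapping the two arguments gives the same value, so the original mean_squared_error(predictions, test[target]) call returns the correct number even though scikit-learn documents the order as (y_true, y_pred).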

```python
# Import the random forest model.
from sklearn.ensemble import RandomForestRegressor

# Initialize the model: 100 trees, at least 10 samples per leaf, fixed seed.
model = RandomForestRegressor(n_estimators=100, min_samples_leaf=10, random_state=1)

# Fit the model to the data.
model.fit(train[columns], train[target])

# Make predictions.
predictions = model.predict(test[columns])

# Compute the error.
print(mean_squared_error(test[target], predictions))
```
